My projects

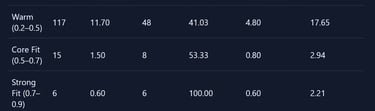

Predict Open Rate of Email subject line using NLP and Machine learning:

Developed a predictive model using Machine Learning and Natural Language Processing (NLP) to predict the open rate of email subject lines. Trained a random forest regression model on internal organizational data. The model was used to optimize subject lines.

Result: Easier decisions for the project owner, faster time to market, and hundreds of hours saved in time and cost.

Libraries used: scikit-learn, NLTK

Model Accuracy: 74%

Identify Phishing Email using NLP and Machine learning:

Developed a thesis project to build a predictive model that uses Machine Learning and Natural Language Processing (NLP) to detect phishing emails. The model was trained on a large dataset of over 210k records and achieved an accuracy of 91.85% using an AdaBoost ensemble with a Random Forest base estimator. The thesis paper is available on request. Code is available on GitHub.

Result: Successful identification of phishing emails, improving organizational cybersecurity.

Libraries used: scikit-learn, NLTK

Model Accuracy: 91.85%

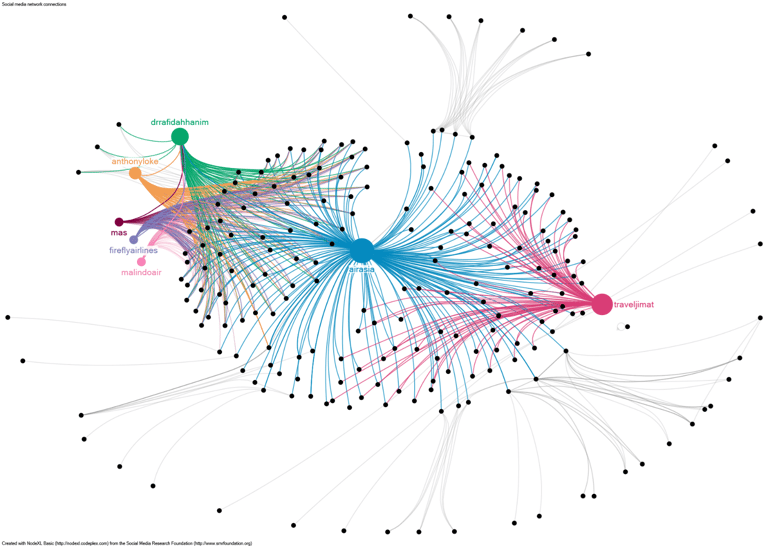

Leveraging Social Network Analysis (SNA) for AirAsia’s marketing strategy:

Used Social Network Analysis methods to profile influential figures within social networks, leverage user-generated content, and formulate successful strategies. The project applied NodeXL to AirAsia’s social network data (Twitter), analyzing degree centrality, betweenness, closeness, in-degree, and out-degree metrics to detect influential figures, their specific role within the network, and the extent of their influence. This analysis was then used to develop marketing strategy recommendations for AirAsia’s Twitter campaign. The study report is available on request.

Tools used: NodeXL
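The project itself used NodeXL, not code. As a rough illustration of what the degree and closeness metrics measure, here is a minimal Python sketch on a hypothetical toy "mention" graph. The account names, the edges, and the helper functions (`build_graph`, `degree_metrics`, `closeness`) are all assumptions made for this example and are not taken from the AirAsia data.

```python
from collections import deque

# Hypothetical toy graph: an edge (a, b) means account a mentions account b.
edges = [
    ("ana", "airline"), ("ben", "airline"), ("cat", "airline"),
    ("airline", "ana"), ("ana", "ben"), ("cat", "dan"), ("dan", "airline"),
]

def build_graph(edges):
    """Return (successors, nodes) for a directed edge list."""
    nodes = sorted({n for edge in edges for n in edge})
    succ = {n: set() for n in nodes}
    for src, dst in edges:
        succ[src].add(dst)
    return succ, nodes

def degree_metrics(succ, nodes):
    """In-degree counts incoming mentions, out-degree counts outgoing ones."""
    out_deg = {n: len(succ[n]) for n in nodes}
    in_deg = {n: 0 for n in nodes}
    for src in nodes:
        for dst in succ[src]:
            in_deg[dst] += 1
    return in_deg, out_deg

def closeness(succ, nodes):
    """Closeness over outgoing paths: reachable nodes / sum of shortest-path lengths."""
    scores = {}
    for start in nodes:
        dist = {start: 0}
        queue = deque([start])
        while queue:  # breadth-first search gives shortest hop counts
            cur = queue.popleft()
            for nxt in succ[cur]:
                if nxt not in dist:
                    dist[nxt] = dist[cur] + 1
                    queue.append(nxt)
        total = sum(dist.values())
        scores[start] = (len(dist) - 1) / total if total else 0.0
    return scores

succ, nodes = build_graph(edges)
in_deg, out_deg = degree_metrics(succ, nodes)
close = closeness(succ, nodes)
most_mentioned = max(nodes, key=lambda n: in_deg[n])
```

In this toy graph, a high in-degree marks an account that many others mention, while a high closeness marks an account that can reach the rest of the network in few hops. Those are the kinds of signals used to single out influential accounts.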

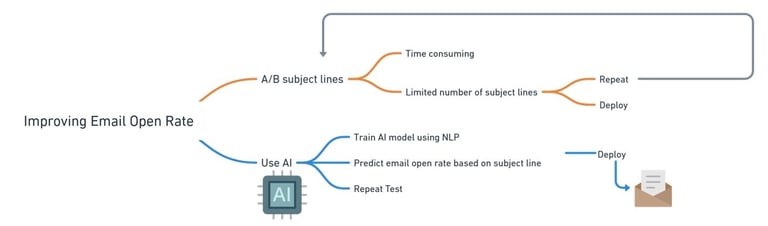

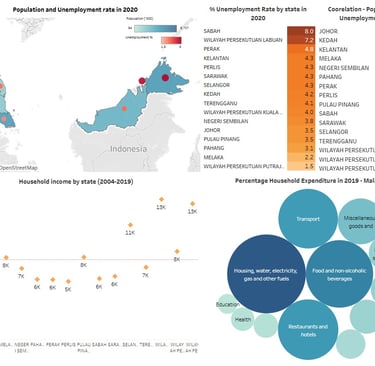

Data Visualization and Study of Unemployment in Malaysia:

Conducted a study and utilized data visualization techniques to analyze unemployment in Malaysia, aiming to implement upskilling initiatives for individuals from lower-income backgrounds to enhance employment prospects. The study involved data from the Malaysian Statistical Institute, exploring several factors influencing unemployment and uncovering the potential cause of unemployment. You can access the Tableau Data Visualization through the link.

Result: Identified the Malaysian states where unemployment can be reduced, the factors contributing to unemployment, a forecast of unemployment, and proposed solutions to reduce unemployment in the focus states.

Tools used: Tableau Prep, Tableau Desktop

Next Best Action in Customer Journey using Machine learning:

Designed a Machine Learning model to identify the most suitable action or path for customer engagement within the customer journey, drawing on previous interactions. Used internal organizational data and an AI model.

Result: Rich Customer Experience, Improved customer engagement

Libraries used: scikit-learn

Tools used: Python, Salesforce Marketing Cloud

Model Accuracy: 85%

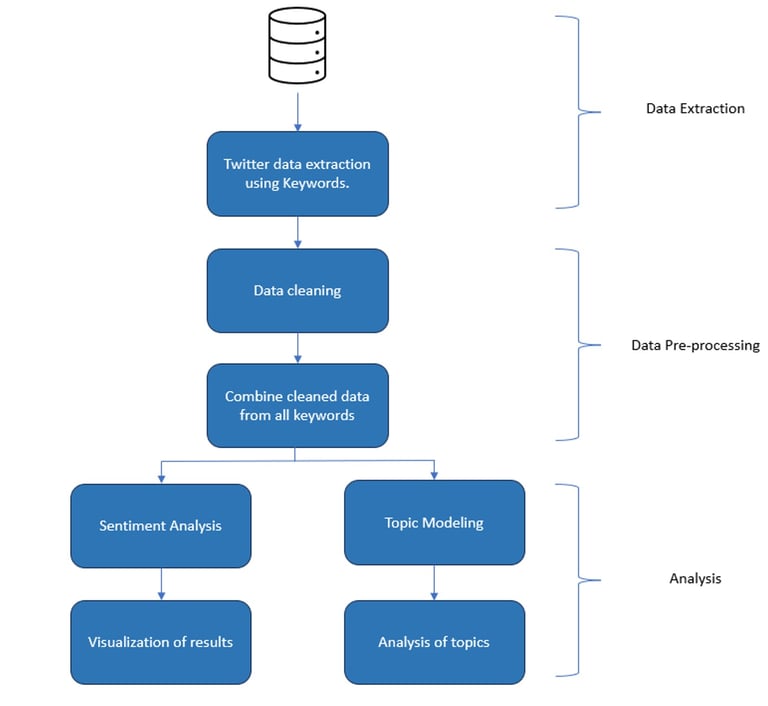

Sentiment Analysis about hybrid workplace as the future of work:

Employed topic modeling and sentiment analysis techniques in RapidMiner to understand the main topics public users discuss about their experience of working from home, the value they see in it, and their perception of the hybrid workplace.

Result: The analysis revealed that 61.8% of the sentiment expressed was positive, indicating a favorable perception of hybrid work.

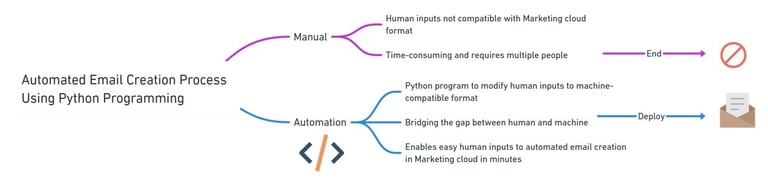

Automated Email Creation process using Python programming:

Automated the email creation process in Salesforce Marketing Cloud using Python programming, streamlining the workflow for efficient deployment.

Result: Faster email builds, fewer errors, and hundreds of hours saved in time and cost.

Tools used: Python, Salesforce Marketing Cloud

Machine Learning for High Frequency algo trading:

Developed a Machine Learning model to predict stock prices in real time for high-frequency trading in the Indian stock market. Used Python for API integration, stock information retrieval, and order placement. The program achieved an accuracy of 99% and required 40 milliseconds to execute orders. Part of the program is available on GitHub (excluding the strategy).

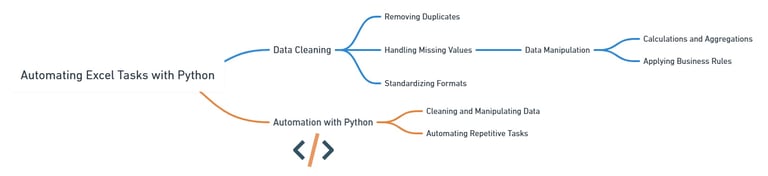

Automated Data Cleaning and Manipulation with Python:

Used Python to automate the repetitive tasks of collecting, cleaning, and formatting registrant information, replacing a manual Excel workflow. This saved significant time and effort and freed up time for other tasks.

Result: Faster data processing for Leadops and hundreds of hours saved in time and cost.

Tools used: Python

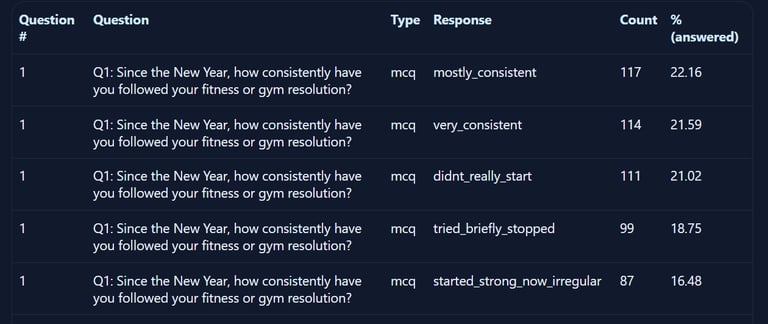

Consumer Behavior Simulation (Poll Simulation)

Developed an AI-driven poll simulation engine that models diverse human decision-making behaviors and latent traits to simulate how different audience segments respond to surveys, questions, and options. The system supports dynamic question logic, drop-off modeling, and response distribution analysis.

Result: Reduced time to launch surveys from days to minutes. Enabled pre-campaign testing without real user exposure. Identified question bias and contradiction effects before deployment. Improved survey quality by highlighting confusing or polarizing questions. Enabled what-if testing of audience mixes and behaviors.

Libraries used: Flask, NumPy, Pandas, Matplotlib / Altair

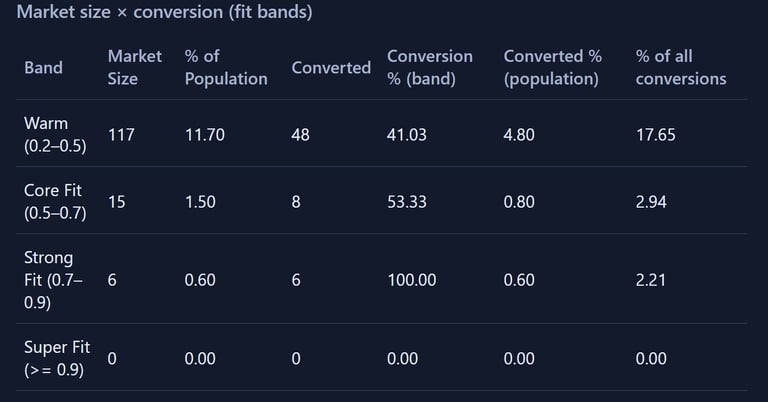

Engagement & Conversion Simulation

Built an engagement and conversion simulator that models how different user personas progress through funnels, campaigns, or multi-step interactions. This allows experimentation with audience mix, behavior strategies, and messaging before real-world deployment.

Result: Prevented over-optimistic conversion assumptions. Helped align expectations between product, marketing, and sales. Enabled data-backed campaign sizing and forecasting.

Libraries used: Flask, NumPy, Pandas, Matplotlib / Altair
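To give a sense of the idea behind persona-based funnel simulation, here is a minimal Monte Carlo sketch using only the Python standard library. It is not the project's actual engine. The persona names, audience shares, step probabilities, and the `simulate_funnel` function are hypothetical values chosen purely for illustration.

```python
import random

# Hypothetical personas: audience share and per-step pass probabilities
# (open -> click -> sign up). All numbers are illustrative, not project data.
PERSONAS = {
    "browser":   {"share": 0.6, "step_probs": [0.30, 0.10, 0.05]},
    "evaluator": {"share": 0.3, "step_probs": [0.50, 0.30, 0.15]},
    "loyalist":  {"share": 0.1, "step_probs": [0.80, 0.60, 0.40]},
}

def simulate_funnel(personas, audience_size, seed=0):
    """Return how many simulated users completed each funnel step."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    names = list(personas)
    weights = [personas[name]["share"] for name in names]
    n_steps = len(personas[names[0]]["step_probs"])
    reached = [0] * n_steps
    for _ in range(audience_size):
        # Draw a persona according to the audience mix.
        persona = rng.choices(names, weights=weights)[0]
        for step, prob in enumerate(personas[persona]["step_probs"]):
            if rng.random() >= prob:
                break  # user drops off before completing this step
            reached[step] += 1
    return reached

AUDIENCE_SIZE = 10_000
counts = simulate_funnel(PERSONAS, audience_size=AUDIENCE_SIZE, seed=42)
conversion_rate = counts[-1] / AUDIENCE_SIZE
```

Changing the `share` values or the step probabilities and rerunning is the what-if experiment: it shows how a different audience mix or messaging assumption shifts the simulated conversion rate before anything is launched.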

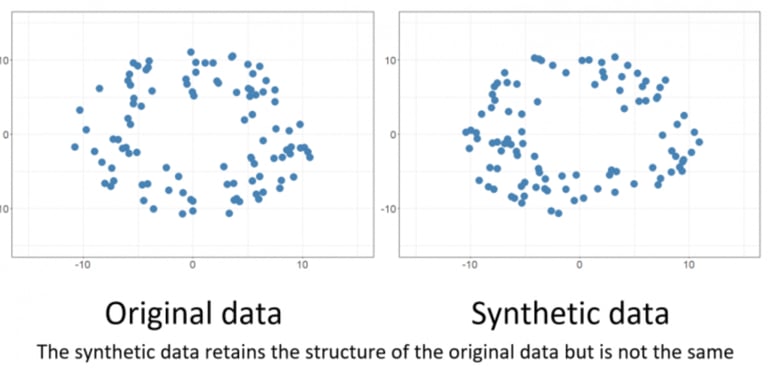

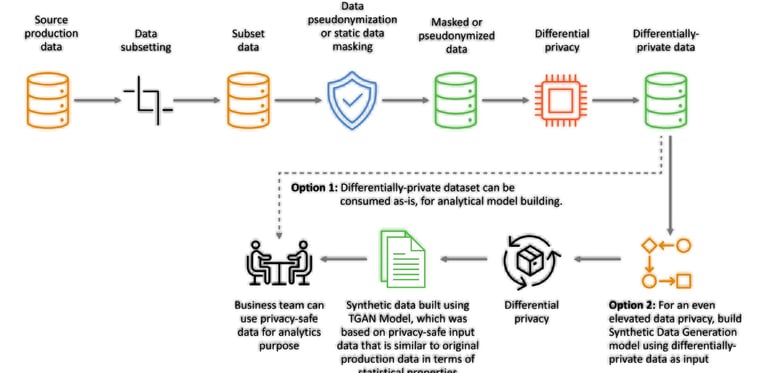

Synthetic Data Generation

Created synthetic data generation pipelines using statistical and generative modeling techniques to produce privacy-safe datasets that preserve real-world patterns. These datasets are used for testing, simulation, analytics, and model training without exposing sensitive data.

Result: Reduced dependency on real production data. Accelerated analytics and ML development cycles. Enabled safe experimentation without compliance risk.

Libraries used: NumPy, Pandas, SDV / CTGAN